Web data collection supports personal research, competitive analysis, SEO, and digital marketing. Collecting data manually is time-consuming and error-prone, and web scraping tools like ParseHub can quickly extract large volumes of data from dynamic sites. Large-scale scraping, however, runs into CAPTCHAs, IP-based access limits, and regional restrictions. Integrating proxies with ParseHub helps you collect data more reliably while masking your IP address for privacy.

This guide explains why you should use proxies with ParseHub and walks through configuring them step by step.


Using proxies with ParseHub offers several advantages that make your scraping more reliable and efficient. The main benefits are:

Many websites use rate limiting, CAPTCHAs, and IP-based filters to manage automated requests, and ParseHub can run into these measures when it sends repeated requests. Integrating proxies with ParseHub, especially rotating ones, distributes traffic across multiple IPs, which reduces detection and request denials. Rotating proxies change the IP address on each request (or at set intervals, depending on the provider), making it harder to attribute the activity to a single source.
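The rotation idea can be sketched in Python as a round-robin pool that hands out a different proxy for each request. This is a minimal illustration, not a ParseHub feature: the `ProxyPool` class is hypothetical, and the addresses come from the reserved 203.0.113.0/24 documentation range. Many commercial rotating proxies rotate on the provider side behind a single endpoint, in which case no client-side pool is needed.

```python
from itertools import cycle


class ProxyPool:
    """Hypothetical round-robin pool: each call returns the next proxy in turn."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("proxy_urls must not be empty")
        self._cycle = cycle(proxy_urls)

    def next_proxies(self):
        # Return a mapping in the shape the `requests` library accepts for its
        # `proxies=` argument; HTTP and HTTPS traffic use the same proxy.
        url = next(self._cycle)
        return {"http": url, "https": url}


# Placeholder addresses from a documentation-only IP range
pool = ProxyPool([
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
])

for _ in range(4):
    print(pool.next_proxies()["http"])  # .10, .11, .12, then back to .10
```

With `requests`, each call would take the next proxy, for example `requests.get(url, proxies=pool.next_proxies(), timeout=30)`.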

Many websites vary content by geographic location, and some block access from specific regions. Region-specific IPs let ParseHub request data as if from the required location, so you can collect local pricing, SEO data, and region-specific products. For more on this, see our article on how to use a proxy to unblock websites.
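To illustrate how a region-specific IP is chosen, the sketch below picks a proxy by ISO country code from a hypothetical pool. The `PROXIES_BY_COUNTRY` table, its addresses, and the `proxies_for_country` helper are placeholders; real providers usually expose country selection through their dashboard, a dedicated endpoint, or username parameters, and the exact mechanism varies by vendor.

```python
# Hypothetical mapping of ISO 3166-1 alpha-2 country codes to proxy URLs
# (addresses are from documentation-only IP ranges).
PROXIES_BY_COUNTRY = {
    "US": ["http://203.0.113.20:8080", "http://203.0.113.21:8080"],
    "DE": ["http://198.51.100.30:8080"],
    "JP": ["http://192.0.2.40:8080"],
}


def proxies_for_country(country_code, index=0):
    """Return a requests-style proxies dict for a proxy located in `country_code`.

    `index` selects among several proxies in the same country (wrapping around).
    Raises KeyError if no proxy is configured for that country.
    """
    pool = PROXIES_BY_COUNTRY[country_code.upper()]
    url = pool[index % len(pool)]
    return {"http": url, "https": url}


print(proxies_for_country("de"))
```

Keeping the lookup strict (a `KeyError` for unknown countries) avoids silently collecting data from the wrong region.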

Without a proxy, every request comes from a single IP address, which can trigger detection and access limits that block further data collection. Integrating proxies with ParseHub keeps sessions more stable by distributing requests across several IPs, so that no single address carries an unusually high request volume and the traffic from each one looks closer to that of a typical user.
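Proxies spread requests across IPs, but pacing still matters. A minimal sketch of a throttle that waits a randomized interval between consecutive requests is shown below. The `Throttle` class and its default range of 2 to 5 seconds are illustrative assumptions, not ParseHub settings; `sleep` and `clock` are injectable so the logic can be tested without real waiting.

```python
import random
import time


class Throttle:
    """Hypothetical helper: enforce a randomized pause between consecutive requests."""

    def __init__(self, min_delay=2.0, max_delay=5.0, sleep=time.sleep, clock=time.monotonic):
        if not 0 <= min_delay <= max_delay:
            raise ValueError("require 0 <= min_delay <= max_delay")
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._sleep = sleep   # injectable for testing
        self._clock = clock   # monotonic clock, unaffected by system time changes
        self._last = None     # time of the previous request, if any

    def wait(self):
        """Pause until a random delay has passed since the previous call; return seconds slept."""
        now = self._clock()
        slept = 0.0
        if self._last is not None:
            # Pick a fresh random gap each time so requests don't arrive on a fixed beat
            target = random.uniform(self.min_delay, self.max_delay)
            remaining = target - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last = self._clock()
        return slept
```

In a scraping loop you would call `throttle.wait()` immediately before each `session.get(...)`; the first call returns at once, and later calls only sleep for whatever part of the random gap has not already elapsed.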

When extracting large datasets, it is important to mask your IP address. Proxies hide your real IP address and location from the target site, adding a layer of privacy that matters especially when you work with sensitive datasets.

If you manage multiple projects or collect large datasets across many domains, using separate IPs reduces account-related risks and minimizes session interruptions. This is useful for agencies, researchers, and businesses running market intelligence at scale.
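One way to keep each project on its own IP is to map project names to proxies deterministically, so the same project always uses the same address across runs. The sketch below does this with a SHA-256 digest; the `proxy_for_project` function and the pool are hypothetical. Note that adding or removing proxies from the pool changes the assignment for many projects.

```python
import hashlib


def proxy_for_project(project_name, proxy_urls):
    """Return the same proxy URL for a given project name on every call and run.

    Python's built-in hash() is randomized per process for strings, so a
    cryptographic digest is used to keep the mapping stable across runs.
    """
    if not proxy_urls:
        raise ValueError("proxy_urls must not be empty")
    digest = hashlib.sha256(project_name.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer and reduce it to a pool index
    index = int.from_bytes(digest[:8], "big") % len(proxy_urls)
    return proxy_urls[index]


# Placeholder pool from a documentation-only IP range
pool = ["http://203.0.113.50:8080", "http://203.0.113.51:8080", "http://203.0.113.52:8080"]
for project in ["client-a-pricing", "client-b-seo", "client-a-pricing"]:
    print(project, "->", proxy_for_project(project, pool))
```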

Proxy setup in ParseHub is the same on Windows and macOS. Follow these steps:

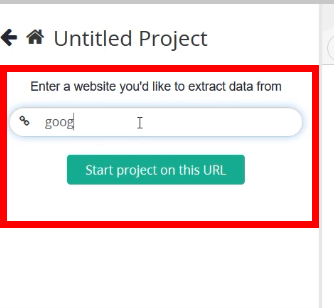

Step 1: Open ParseHub and click the "New Project" button in the main window to create a project for the proxy configuration.

Step 2: In the next window, enter the URL of the site you wish to parse in the left pane, then click "Start project on this URL".

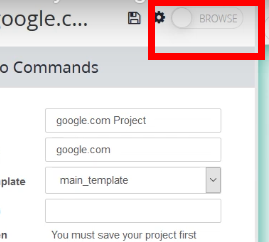

Step 3: Enable the “Browse” slider to start collecting data from the site.

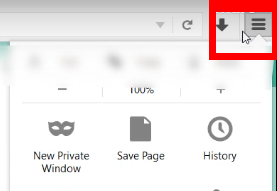

Step 4: To configure the proxy, open the browser menu by clicking the three horizontal lines in the top right corner.

Step 5: In the dropdown menu, click the “Options” gear icon.

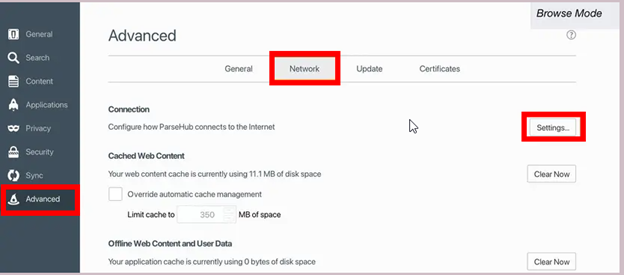

Step 6: In the “Options” menu, navigate to the “Advanced” section on the left, go to the “Network” tab, and in the “Connection” settings, click the “Settings” button.

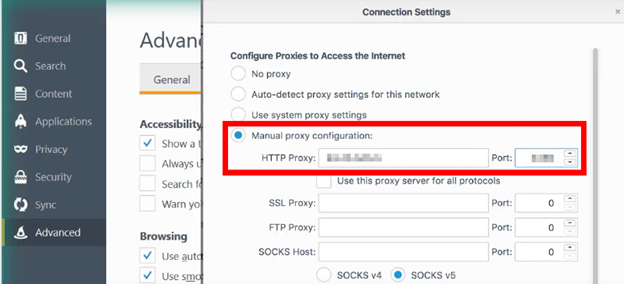

Step 7: In the next window, select “Manual Proxy Configuration” to enable the fields for the proxy's IP address and port, then fill them in.

Step 8: If your proxy requires authentication, as premium proxies usually do, you will be prompted for the username and password.

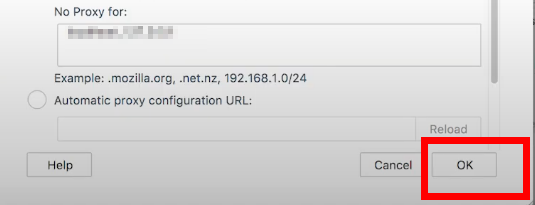

Step 9: Optionally, list the IP addresses or domains that should bypass the proxy. Keep in mind that standard proxies do not encrypt traffic; for encryption, use a VPN or an HTTPS (TLS) proxy. When you have finished, click OK.
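Before entering the address and port in Step 7, it can help to confirm that the proxy endpoint accepts connections at all, which separates network problems from settings mistakes. This Python sketch only checks that a TCP connection to `host:port` succeeds; it does not verify credentials or whether the proxy actually forwards traffic. The `proxy_reachable` name is hypothetical.

```python
import socket


def proxy_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        # create_connection resolves the host and tries each address it returns
        with socket.create_connection((host, int(port)), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, DNS failures and timeouts
        return False
```

For example, `proxy_reachable("203.0.113.10", 8080)` returning `False` points to a wrong address, a closed port, or a firewall rather than to a problem in ParseHub's settings.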

On Linux, you can configure proxies in two ways: with a configuration file or through the API. To use a configuration file:

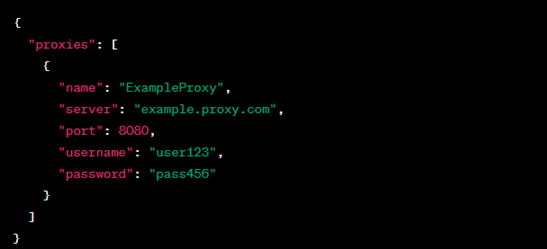

Step 1: Using any text editor, create a configuration file named `proxy.json` containing the proxy name, server address, port, username, and password. Use the following template:

```json
{
  "proxies": [
    {
      "name": "YourProxyName",
      "server": "ProxyServerAddress",
      "port": ProxyServerPort,
      "username": "ProxyUsername",
      "password": "ProxyPassword"
    }
  ]
}
```

Step 2: Replace each placeholder with your proxy server's details. Enter the port as a number, without quotes.

Step 3: Save the file. To launch ParseHub with these settings, run the command `parsehub "proxy/path/to/your/proxy.json"` in the terminal, as shown in the screenshot.

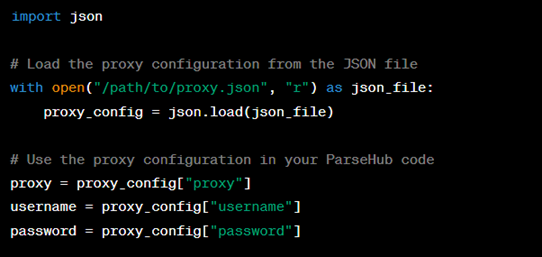
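Because a single mistake in `proxy.json` (for example, an unreplaced placeholder or a malformed port) can stop the settings from loading, you may want to validate the file before launching. The following Python sketch checks the structure used in the template: a top-level `proxies` list whose entries contain `name`, `server`, `port`, `username`, and `password`. The rules it enforces, such as requiring an integer port between 1 and 65535, are our assumptions rather than a documented ParseHub schema, and `validate_proxy_config` is a hypothetical helper.

```python
import json

REQUIRED_FIELDS = ("name", "server", "port", "username", "password")


def validate_proxy_config(text):
    """Parse proxy.json content and return a list of problems (empty if none found)."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        # Unreplaced placeholders such as ProxyServerPort end up here
        return [f"invalid JSON: {exc}"]

    proxies = data.get("proxies") if isinstance(data, dict) else None
    if not isinstance(proxies, list) or not proxies:
        return ['top-level "proxies" must be a non-empty list']

    problems = []
    for i, entry in enumerate(proxies):
        if not isinstance(entry, dict):
            problems.append(f"proxies[{i}] must be an object")
            continue
        for field in REQUIRED_FIELDS:
            if field not in entry:
                problems.append(f'proxies[{i}] is missing "{field}"')
        port = entry.get("port")
        # bool is a subclass of int in Python, so it is excluded explicitly
        valid_port = isinstance(port, int) and not isinstance(port, bool) and 1 <= port <= 65535
        if "port" in entry and not valid_port:
            problems.append(f"proxies[{i}].port must be an integer between 1 and 65535")
    return problems
```

To check a saved file, read it with `open("proxy.json", encoding="utf-8")` and print each string returned by `validate_proxy_config(f.read())`; an empty list means no problems were found.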

To use the API instead, follow these steps:

Step 1: Install the requests library by running `pip install requests` in your terminal.

Step 2: Set up access to your ParseHub API key by replacing the placeholders in the template shown in the screenshot with your details:

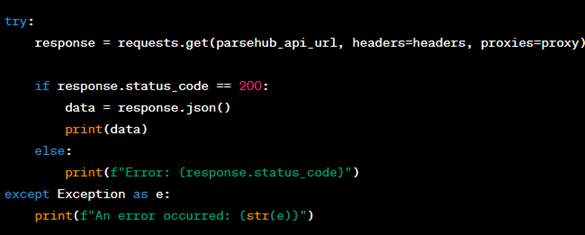

Step 3: Send a request to ParseHub using these settings. For example, the screenshot shows a code snippet that sends a GET request and processes the response.

Step 4: For private proxies that require authentication, use the following code structure:

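```python
from urllib.parse import quote

import requests

# Replace these placeholders with your proxy details
proxy_ip = 'IP address'
proxy_port = 'port number'
proxy_username = 'username'
proxy_password = 'password'

# URL-encode the credentials so characters such as "@" or ":" don't break the proxy URL
auth = f'{quote(proxy_username, safe="")}:{quote(proxy_password, safe="")}'
proxy_url = f'http://{auth}@{proxy_ip}:{proxy_port}'

session = requests.Session()
# Most proxies accept plain-HTTP connections from the client and tunnel HTTPS
# traffic through them, so both keys point at the same http:// proxy URL
session.proxies = {
    'http': proxy_url,
    'https': proxy_url,
}

url = 'https://example.com'
response = session.get(url, timeout=30)
response.raise_for_status()  # raise an error on 4xx/5xx instead of printing an error page
print(response.text)
```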

Refer to the example code and screenshot for guidance:

Once your proxy settings and ParseHub API key are configured, the Python setup will work as expected. Private proxies improve security and privacy when scraping, because they are not shared with other users.
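For Step 3 of the API method, the sketch below builds two request URLs for ParseHub's REST API: one that returns a project's details and one that returns the data from its most recent completed run. The base URL and the `projects/{token}` and `last_ready_run/data` paths reflect ParseHub's public API v2 as we understand it; confirm them against ParseHub's API reference before relying on them. The helper names are hypothetical.

```python
from urllib.parse import quote, urlencode

# Base URL of ParseHub's REST API (v2); verify against ParseHub's API reference.
PARSEHUB_API = "https://www.parsehub.com/api/v2"


def project_url(project_token, api_key):
    """Build the GET URL that returns details for one ParseHub project."""
    token = quote(project_token, safe="")
    return f"{PARSEHUB_API}/projects/{token}?{urlencode({'api_key': api_key})}"


def last_run_data_url(project_token, api_key, fmt="json"):
    """Build the GET URL for the data of the project's most recent completed run."""
    token = quote(project_token, safe="")
    query = urlencode({"api_key": api_key, "format": fmt})
    return f"{PARSEHUB_API}/projects/{token}/last_ready_run/data?{query}"
```

Either URL can then be fetched with `requests.get(url, timeout=30)`. Keeping the API key out of hard-coded strings, for example by reading it from an environment variable, avoids leaking it in shared scripts.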

Integrating proxies with ParseHub is a practical choice when you need to protect your privacy, access region-specific data, and reduce IP-based access issues. Configuration is straightforward on Windows, macOS, and Linux, and it improves the efficiency and scalability of data collection.

Use high-quality rotating proxies with ParseHub to keep reliable access to real-time data on sites that rate-limit requests or restrict IPs. Combining proxies with this no-code tool helps maintain consistent results despite common anti-automation measures.

Note that while proxies reduce IP-based restrictions and improve reliability, ParseHub offers limited control over request headers and JavaScript emulation. For more advanced logic, pair it with middleware or post-process the results retrieved through its API.
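As an example of API post-processing, the sketch below cleans records from a run's JSON output by trimming whitespace and dropping duplicates. It assumes the run data is a JSON object in which one selection (here a hypothetical `products` key) holds a list of flat records identified by a hypothetical `url` field; the actual keys depend on how your ParseHub project names its selections.

```python
import json


def clean_records(run_json, selection="products", key_field="url"):
    """Return de-duplicated records from one selection of a run's JSON output.

    `selection` and `key_field` are hypothetical names; use the ones from your project.
    String values are stripped of surrounding whitespace, and a record whose
    `key_field` value repeats an earlier record's is dropped (the first one wins).
    Records without `key_field` are always kept.
    """
    data = json.loads(run_json)
    seen = set()
    cleaned = []
    for record in data.get(selection, []):
        record = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        key = record.get(key_field)
        if key is not None:
            if key in seen:
                continue
            seen.add(key)
        cleaned.append(record)
    return cleaned
```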

No. ParseHub is a no-code tool for extracting website data. Although it can collect large volumes quickly, it still faces rate limiting and IP-based restrictions. Using proxies helps manage rate limiting and collect region-specific data while reducing IP-related risks.

Yes, free proxies can be used with ParseHub. However, they are often unreliable and slow, and they can carry security risks, which may lead to failed collections and access restrictions. For large-scale workloads, consider private proxies.

Here are some best practices for integration with ParseHub: