Web scraping, automated testing, monitoring changes, and browser management all require specialized automation software, and choosing between the available solutions can be complicated due to the large number of similar tools. However, when working with JavaScript-heavy websites, the choice usually comes down to two options – Puppeteer vs Selenium. They differ in architecture, how they interact with browsers, configuration flexibility, and performance.

This comparative review is based on the key parameters that are critical when selecting a tool.

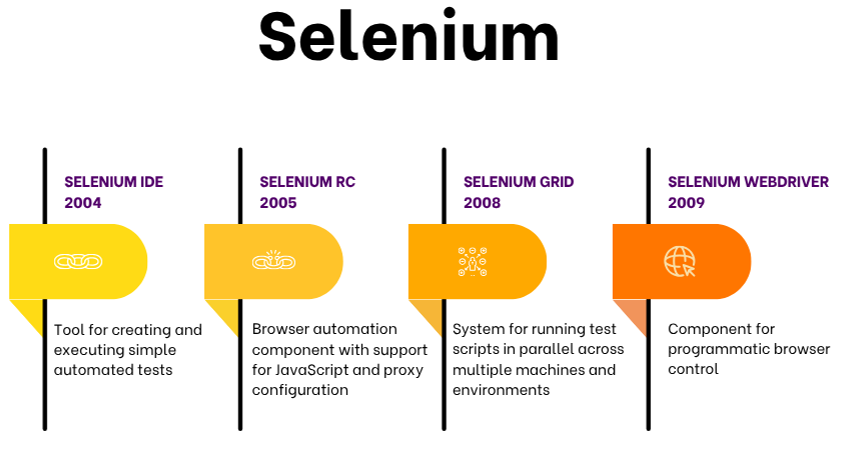

Selenium is a framework originally developed in 2004 for automating web application testing. Over time, its functionality has significantly expanded, and today it is widely used for web scraping, monitoring, automating user scenarios, and various web interface interactions.

It supports multiple programming languages and offers cross-platform and cross-browser compatibility, making it one of the most flexible automation solutions.

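A distinctive feature of this library is its modular architecture. The suite includes Selenium WebDriver (the API that drives the browser), Selenium IDE (a browser extension for recording and replaying scenarios), and Selenium Grid (a server for running tests in parallel across machines and browsers).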

Despite its broad capabilities, the platform has some limitations. The main ones are the technical complexity of the initial setup and a high entry threshold: effective work with the library requires programming skills and an understanding of the architecture and network communication principles.

Puppeteer is an open-source library developed by Google for automating Chrome and other Chromium-based browsers. It uses the Chrome DevTools Protocol, which enables low-level interaction and high performance for tasks such as automating repetitive actions, extracting data from dynamic websites, testing web applications, monitoring changes, capturing traffic, and intercepting network requests.

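It supports two main operating modes: headless, where the browser runs without a visible window, and headful, where a regular browser window is shown.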

Unlike Selenium, Puppeteer is intended exclusively for JavaScript developers.

To decide between Selenium and Puppeteer, it's best to start with architecture, as it affects performance, setup complexity, language support, and browser compatibility.

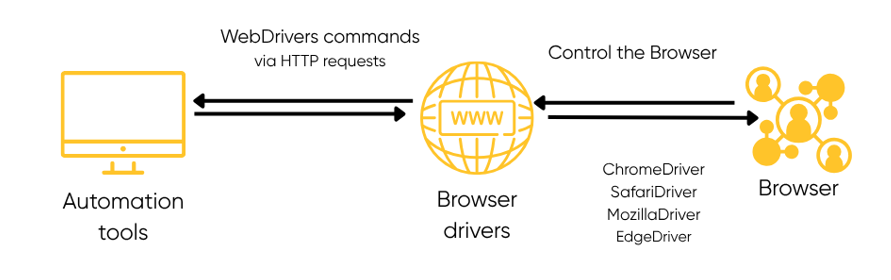

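The core of Selenium is WebDriver, a component that manages the browser and interacts with it via an API. For correct operation, the driver binary (for example, chromedriver or geckodriver) must match the installed browser version and be built for the host operating system. Since Selenium 4.6, the bundled Selenium Manager can download a matching driver automatically, but the dependency on a separate driver remains.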

To integrate WebDriver into a project, developers must set up the environment, import the library for their programming language, install the appropriate driver, and create an instance that will perform all web page actions. Selenium sends HTTP requests to the driver, which interacts with the browser via native interfaces, executes commands, and returns results.
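
To make this request flow more concrete, here is a minimal TypeScript sketch of the HTTP calls a client library sends to a driver. The endpoint paths follow the W3C WebDriver specification; the `WireRequest` type, the helper names, the session id, and the address `http://localhost:9515` (chromedriver's default port) are assumptions made for illustration, and nothing is actually sent over the network.

```typescript
// Shape of one HTTP call from a client library to a driver (hypothetical type).
interface WireRequest {
  method: "POST" | "GET" | "DELETE";
  url: string;
  body?: unknown;
}

// Assumed local driver address; chromedriver listens on port 9515 by default.
const DRIVER_URL = "http://localhost:9515";

// POST /session asks the driver to start a browser; the response carries a session id.
function newSession(browserName: string): WireRequest {
  return {
    method: "POST",
    url: `${DRIVER_URL}/session`,
    body: { capabilities: { alwaysMatch: { browserName } } },
  };
}

// POST /session/{id}/url tells the browser in that session to open a page.
function navigateTo(sessionId: string, pageUrl: string): WireRequest {
  return {
    method: "POST",
    url: `${DRIVER_URL}/session/${sessionId}/url`,
    body: { url: pageUrl },
  };
}

// DELETE /session/{id} closes the browser and ends the session.
function deleteSession(sessionId: string): WireRequest {
  return { method: "DELETE", url: `${DRIVER_URL}/session/${sessionId}` };
}

// The three requests behind "open a browser, load a page, quit".
// "abc123" stands in for the id the driver would return from newSession.
const wireExample: WireRequest[] = [
  newSession("chrome"),
  navigateTo("abc123", "https://example.com"),
  deleteSession("abc123"),
];
```

Each high-level call in a Selenium script maps to one or more requests of this kind, which is where the extra round trips compared with a direct connection come from.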

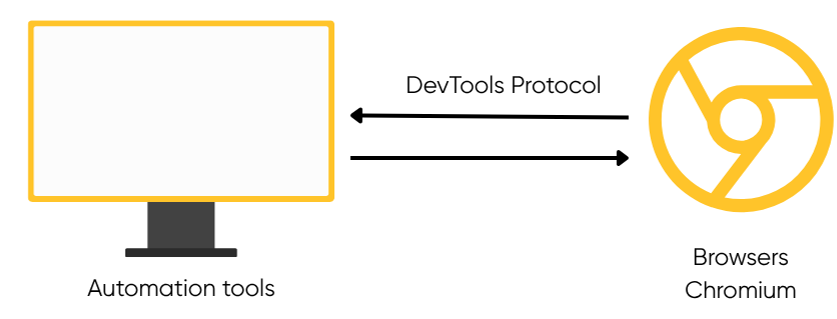

Puppeteer offers a different approach: it is based on the Chrome DevTools Protocol, a low-level control interface built into the browser. Commands are transmitted as JSON messages over a WebSocket connection, which keeps overhead low. The user doesn't need to install anything separately for the protocol, since it's built into all Chromium-based browsers.
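
As a rough illustration of what travels over that WebSocket, the sketch below encodes a DevTools Protocol command as JSON and matches a reply to it by id. `Page.navigate` is a real protocol method; the `CdpCommand` type and the helper functions are hypothetical names for this example, and no socket is opened.

```typescript
// One DevTools Protocol command as it travels over the WebSocket (JSON text).
interface CdpCommand {
  id: number;      // client-chosen id, echoed back in the matching response
  method: string;  // "Domain.method", for example "Page.navigate"
  params?: Record<string, unknown>;
}

let nextCommandId = 0;

// Serialize a command the way a client would before calling socket.send().
function encodeCommand(method: string, params?: Record<string, unknown>): string {
  nextCommandId += 1;
  const command: CdpCommand = { id: nextCommandId, method, params };
  return JSON.stringify(command);
}

// Responses carry the id of the command they answer; events have no id.
function isResponseTo(rawMessage: string, commandId: number): boolean {
  const message = JSON.parse(rawMessage) as { id?: number };
  return message.id === commandId;
}

// The first command sent in this sketch, so it gets id 1.
const navigateFrame = encodeCommand("Page.navigate", { url: "https://example.com" });
```

Because there is no intermediate driver process, a command and its response are a single message exchange with the browser.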

As we can see, the architectural differences between these two are significant. While Selenium requires explicit integration with external drivers and manual configuration, Puppeteer bundles a compatible Chromium version and works "out of the box" with no extra configuration required.

Selenium is compatible with Google Chrome, Firefox, Microsoft Edge, Safari, and Opera (with some nuances: Opera uses chromedriver).

Puppeteer was initially focused on tight integration with Chromium-based browsers. Firefox support was implemented in experimental mode and does not provide full access to all API features.

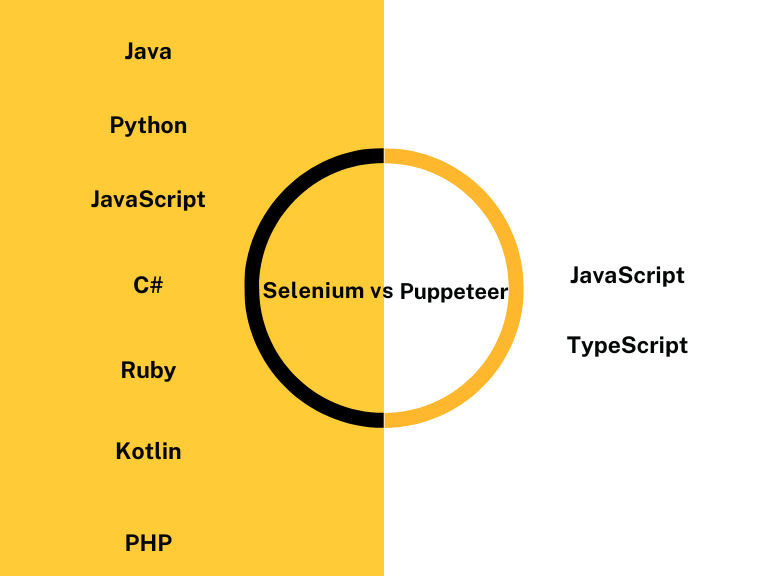

Selenium offers broad multi-language support, making it a universal solution for teams with different tech stacks. Official client libraries are available for Java, Python, C#, JavaScript (Node.js), and Ruby; Kotlin works through the Java bindings, and PHP is covered by a community-maintained client. This allows the tool to be integrated into diverse CI/CD pipelines and testing infrastructures.

Puppeteer is exclusively tied to the Node.js ecosystem and is compatible only with JavaScript and TypeScript. This approach is relevant for projects built on modern JavaScript frameworks but limits its use in environments that employ other programming languages.

It’s important to note that Python is a key language for automation and web scraping. Here Puppeteer loses to its competitor, since it has no direct Python support. The unofficial wrapper “pyppeteer” provided only partial compatibility and is no longer maintained, so a Puppeteer vs Selenium comparison is not meaningful for Python projects.

The performance of Puppeteer vs Selenium is directly determined by the architectural solutions at the core of each tool.

Selenium interacts with the browser via WebDriver—a middleware layer that consumes system resources. Puppeteer, on the other hand, uses the DevTools Protocol and communicates directly with the browser, which reduces delays and increases the overall execution speed, especially in headless mode.

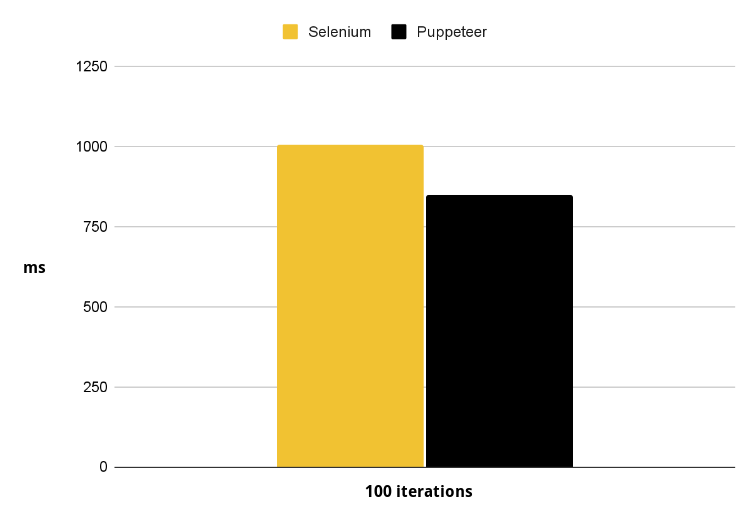

Consider an example. In a series of 100 tests on a PC with 16 GB of RAM and a 2.6 GHz processor, Puppeteer completed a scraping task in 849.46 ms, while Selenium took 1008.08 ms.
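
To put these two figures in proportion, the short calculation below derives the absolute and relative difference. It uses only the timings quoted above; the variable and function names are illustrative.

```typescript
// Timings quoted in the text, in milliseconds.
const puppeteerMs = 849.46;
const seleniumMs = 1008.08;

// Share of the slower run's time that the faster run saved.
function relativeSaving(fasterMs: number, slowerMs: number): number {
  return (slowerMs - fasterMs) / slowerMs;
}

const savedMs = seleniumMs - puppeteerMs;                    // about 158.62 ms
const savedShare = relativeSaving(puppeteerMs, seleniumMs);  // about 0.157, i.e. roughly 15.7%
const speedup = seleniumMs / puppeteerMs;                    // about 1.19x
```

In other words, Puppeteer finished this particular task in roughly 16% less time, a noticeable but not dramatic gap.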

However, speed is not the only factor: in typical scenarios like the one above, Selenium may deliver more stable behavior over time. Its architecture is less sensitive to updates: each driver is developed for a specific browser version, which reduces the risk of incompatibility. Puppeteer relies directly on the DevTools Protocol, so Chromium updates can cause errors. This is especially important for long-term projects: because of the library's tight binding to the internal Chrome API, it needs regular updates and additional testing after new Chromium releases.

When comparing Selenium vs Puppeteer, it is important to consider how they work with dynamic pages, support for headless mode, and the possibilities for integrating third-party solutions.

Headless mode allows you to run the browser without a graphical interface, which is especially useful for running automated tasks on a server or in CI/CD environments. Both tools support this mode, but they operate differently.

Puppeteer delivers higher execution speed and places less load on the system, especially when working with dynamic pages where fast DOM construction and updates are crucial.

Selenium, despite higher resource consumption in this mode, ensures greater reliability for cross-browser automation and is better suited for complex tests where real user behavior needs to be emulated across different environments.

JavaScript is one of the main challenges in automation and scraping, as dynamically loaded content requires proper rendering.

Puppeteer is specifically designed for working with JavaScript. It efficiently tracks DOM changes, uses built-in waiting mechanisms for elements, and supports various user scenarios: scrolling, clicks, input, navigation, and other actions.

Selenium also interacts with JavaScript, but requires manual configuration of waits. This makes working with dynamic sites more complex without proper script setup.
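
The idea behind an explicit wait can be sketched as a polling loop: check a condition repeatedly until it holds or a timeout expires. The `waitUntil` helper below is a generic illustration written for this article, not Selenium's own API; in the selenium-webdriver package for Node.js the corresponding call is `driver.wait(condition, timeout)`.

```typescript
// Poll `condition` until it returns a value other than null or false,
// or fail once `timeoutMs` has elapsed.
async function waitUntil<T>(
  condition: () => T | null | false | Promise<T | null | false>,
  timeoutMs: number,
  pollMs = 100,
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    const result = await condition();
    if (result !== null && result !== false) {
      return result as T;
    }
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    // Sleep briefly before checking again.
    await new Promise<void>((resolve) => setTimeout(resolve, pollMs));
  }
}
```

A script would pass a condition such as "the element with the product price exists" and continue only once the dynamically loaded content has appeared. Choosing the timeout and polling interval for each page is the manual tuning referred to above.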

Both solutions support mobile device emulation.

Puppeteer offers ready-to-use configurations for popular smartphones (for example, iPhone and Pixel), automatically setting parameters for viewport, user-agent, and touch events—this greatly simplifies implementing mobile simulation.

Selenium also allows you to mimic mobile device behavior, but requires more complex manual configuration through ChromeOptions or integration with third-party tools such as Appium.
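
To show what this manual configuration involves, the sketch below builds a capabilities object with ChromeDriver's `mobileEmulation` option. The `deviceMetrics` and `userAgent` keys are part of ChromeDriver's documented option; the `DeviceProfile` type, the `examplePhone` values, and the function name are placeholders for illustration, not a real device preset.

```typescript
// Device parameters that a ready-made preset would otherwise set automatically.
interface DeviceProfile {
  userAgent: string;
  width: number;       // viewport width in CSS pixels
  height: number;      // viewport height in CSS pixels
  pixelRatio: number;  // device scale factor
  touch: boolean;      // whether touch events are emulated
}

// Hypothetical phone profile; the values are placeholders, not a real preset.
const examplePhone: DeviceProfile = {
  userAgent: "Mozilla/5.0 (Linux; Android 13; ExamplePhone) Mobile",
  width: 412,
  height: 915,
  pixelRatio: 2.625,
  touch: true,
};

// Build the capabilities object carrying ChromeDriver's mobileEmulation option.
function toChromeCapabilities(device: DeviceProfile) {
  return {
    browserName: "chrome",
    "goog:chromeOptions": {
      mobileEmulation: {
        deviceMetrics: {
          width: device.width,
          height: device.height,
          pixelRatio: device.pixelRatio,
          touch: device.touch,
        },
        userAgent: device.userAgent,
      },
    },
  };
}

const mobileCapabilities = toChromeCapabilities(examplePhone);
```

With Puppeteer's presets, the same viewport, user-agent, and touch values come from a single named device, which is why the setup there is shorter.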

In Selenium, proxies are configured via browser launch parameters or profile configuration, which solves basic tasks for bypassing geo-blocks and limits.

Puppeteer offers more flexible options. In addition to proxy settings, it allows you to dynamically change the user-agent, manage headers, and use stealth plugins to mask automated actions on web pages.
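
For Chromium-based browsers, both tools ultimately pass the proxy address to the browser as a launch argument. The sketch below builds that argument; `--proxy-server` is a real Chromium flag, while the `ProxyEndpoint` type, the function name, and the host `proxy.example.com` are assumptions for illustration.

```typescript
// Address of a proxy server (hypothetical type for this sketch).
interface ProxyEndpoint {
  protocol: "http" | "https" | "socks5";
  host: string;
  port: number;
}

// Chromium's --proxy-server flag takes scheme://host:port, without credentials.
function proxyLaunchArgs(proxy: ProxyEndpoint): string[] {
  return [`--proxy-server=${proxy.protocol}://${proxy.host}:${proxy.port}`];
}

// Placeholder endpoint; replace with a real proxy address.
const exampleProxyArgs = proxyLaunchArgs({
  protocol: "http",
  host: "proxy.example.com",
  port: 8080,
});
```

In Puppeteer the resulting array would go into the `args` option of `puppeteer.launch()`, and in Selenium it would be added to the Chrome options with `addArguments()`. A username and password are not part of the flag and have to be supplied separately.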

The following table summarizes the key differences between the two tools.

| Criterion | Selenium | Puppeteer |
|---|---|---|
| Browser Support | Chrome, Firefox, Edge, Safari, Opera | Chromium-based browsers; Firefox (experimental) |
| Language Support | Java, Python, JS, C#, Ruby (official); Kotlin and PHP via Java or community bindings | Only JavaScript and TypeScript |
| Architecture | WebDriver, interaction via HTTP API | DevTools Protocol, WebSocket, direct control |
| Headless Mode | Supported, but uses more resources | Faster, more efficient, less load |
| JavaScript/DOM | Requires manual waits | Built-in waits, stable with dynamic content |
| Mobile Emulation | Via ChromeOptions/Appium | Ready-made device presets (Pixel, iPhone, others) |
| Proxy and Bypass of Restrictions | Standard browser proxy settings | Flexible parameters, stealth plugin support |
| CI/CD Integration | Supported by most pipelines | Convenient in JS ecosystem, limited elsewhere |
| Performance | Lower due to intermediary WebDriver layer | Higher, especially in headless mode |
| Reliability | Higher: less dependent on browser version | Dependent on Chromium updates |

Both frameworks have found practical use in a variety of tasks — from interface testing to large-scale data collection. The choice depends on project specifics, the volume and type of data being processed, the target browsers, and performance requirements. Below are the most common scenarios where these tools are applied in real projects.

When comparing Selenium vs Puppeteer for web scraping, it is difficult to identify a clear leader — it all depends on the specifics of the task and the required depth of site interaction.

Selenium extracts data through WebDriver, controlling the browser at the DOM level. This is suitable for collecting text, tables, and links, as well as for working with forms (input, submission), managing cookies, and navigating through the site. However, dynamic JavaScript content requires fine-tuning of waits, and media downloads usually need additional libraries. Screenshots are available through WebDriver's built-in screenshot command, although it is more basic than Puppeteer's.

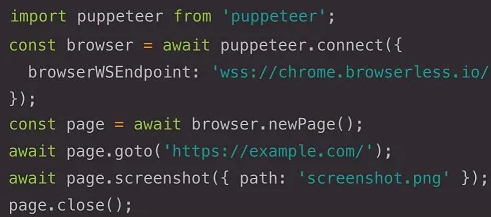

Puppeteer, on the other hand, interacts directly with the browser through the DevTools Protocol. It natively supports extracting text, JSON, and HTML, downloading images and videos, working with forms, creating screenshots and PDF documents, and emulating user actions. Basic anti-bot protection can be handled with proxy support and third-party stealth plugins. The proxy address is set through browser launch parameters, and proxy credentials are passed with page.authenticate().

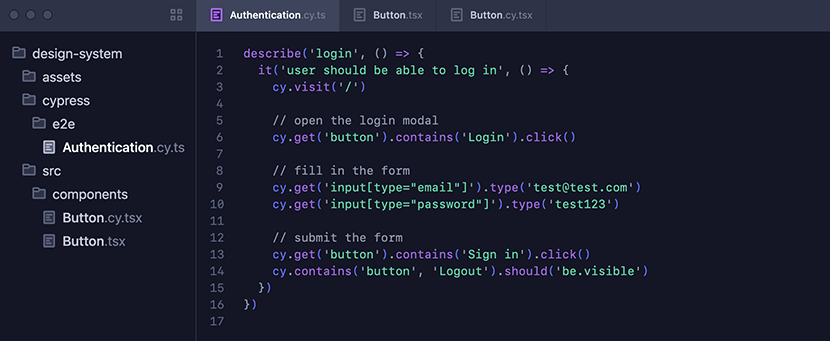

Selenium is widely used for testing web applications in different browsers and operating systems. It covers UI tests, forms, navigation, authentication, and behavior at different screen resolutions. Thanks to compatibility with Appium, it can also be used to test mobile apps (iOS/Android), which makes it a strong choice for cross-browser and cross-platform testing.

Example of E2E authentication test written in TypeScript:
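
A minimal sketch of such a test is shown below. To keep it self-contained, it is written against two small hypothetical interfaces (`DriverLike`, `ElementLike`) that mirror method names from the selenium-webdriver package; the `/login` and `/dashboard` paths and the CSS selectors are placeholders for a hypothetical application.

```typescript
// Minimal slice of a WebDriver session that the test needs (hypothetical interfaces).
interface ElementLike {
  sendKeys(text: string): Promise<void>;
  click(): Promise<void>;
}
interface DriverLike {
  get(url: string): Promise<void>;
  findElement(locator: { css: string }): PromiseLike<ElementLike>;
  getCurrentUrl(): Promise<string>;
}

// Log in through the form and report whether the app redirected to the dashboard.
// The URL paths and CSS selectors are placeholders for a hypothetical application.
async function loginSucceeds(
  driver: DriverLike,
  baseUrl: string,
  username: string,
  password: string,
): Promise<boolean> {
  await driver.get(`${baseUrl}/login`);
  await (await driver.findElement({ css: "#username" })).sendKeys(username);
  await (await driver.findElement({ css: "#password" })).sendKeys(password);
  await (await driver.findElement({ css: "button[type=submit]" })).click();
  return (await driver.getCurrentUrl()).startsWith(`${baseUrl}/dashboard`);
}
```

With selenium-webdriver installed, the `driver` argument would be the object returned by `new Builder().forBrowser("chrome").build()`. In a real run, the final URL check would normally sit behind an explicit wait, since the redirect may not complete immediately after the click.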

Puppeteer is more focused on testing web interfaces, especially JavaScript-heavy single-page applications (SPAs). It is well-suited for rendering checks, verifying that elements load, DOM manipulation, and running user scenarios. However, it is limited to the Chromium ecosystem.

Example of using Puppeteer to remotely connect to a browser and take a screenshot of a web page:
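
A minimal sketch of this scenario follows. It is written against two small hypothetical interfaces (`RemoteBrowserLike`, `PageLike`) that mirror method names from Puppeteer's Browser and Page classes, so it stays self-contained; the function name and its parameters are assumptions for illustration.

```typescript
// Minimal slice of the browser and page objects used below (hypothetical interfaces
// that mirror method names from Puppeteer's Browser and Page classes).
interface PageLike {
  goto(url: string): Promise<unknown>;
  screenshot(options: { path: string; fullPage?: boolean }): Promise<unknown>;
  close(): Promise<void>;
}
interface RemoteBrowserLike {
  newPage(): Promise<PageLike>;
  disconnect(): Promise<void> | void;
}

// Open `url` in an already running browser and save a full-page screenshot.
async function captureRemote(
  browser: RemoteBrowserLike,
  url: string,
  path: string,
): Promise<void> {
  const page = await browser.newPage();
  try {
    await page.goto(url);
    await page.screenshot({ path, fullPage: true });
  } finally {
    await page.close();
    // Drop the connection but leave the remote browser running.
    await browser.disconnect();
  }
}
```

With Puppeteer installed, the `browser` argument would come from `puppeteer.connect({ browserWSEndpoint })`, where the WebSocket endpoint of the remote browser has to be known in advance. Calling `disconnect()` rather than `close()` keeps the remote browser available for the next job.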

Thanks to fast headless launch and native JavaScript support, Puppeteer is well-suited for monitoring changes in dynamic interfaces — such as tracking price updates, new products, reviews, or comment updates, as well as changes to DOM elements on SPA sites. It lets you set up scheduled checks and can automatically save screenshots, HTML, or export information about updates.

Selenium is also capable of monitoring, especially when interaction with different browsers or a more stable environment is needed. However, under heavy load and frequent checks, it may be outperformed in speed and resource consumption.

After reviewing the key differences between Puppeteer and Selenium, it’s possible to highlight the main pros and cons of each.

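Advantages of Selenium: support for Chrome, Firefox, Edge, Safari, and Opera; client libraries for several programming languages; integration with most CI/CD pipelines and with Appium for mobile testing; lower sensitivity to browser updates.

Weaknesses of Selenium: more complex initial setup and a high entry threshold; lower speed because of the intermediary WebDriver layer; waits for dynamic content must be configured manually.

Strengths of Puppeteer: direct browser control through the DevTools Protocol; fast execution, especially in headless mode; built-in waits for dynamic content; ready-made mobile device presets; native screenshot and PDF generation.

Weaknesses of Puppeteer: limited to Chromium-based browsers, with only experimental Firefox support; JavaScript and TypeScript only; dependence on Chromium updates.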

If the main task is web scraping, Puppeteer is usually the better choice. Its headless mode ensures fast loading, and direct management via the DevTools Protocol enables effective work with dynamic content. It easily integrates with proxies, allowing not just IP rotation but also management of headers and cookies, which is essential for bypassing protection systems and regional restrictions. It is also suitable for tracking changes, thanks to rapid DOM updates and instant reactions.

Selenium is also suitable for web scraping, including extracting text and working with tables, forms, and cookies. However, additional libraries are often required to handle media. Its main advantage is cross-platform and cross-browser support, which makes it hard to replace for automated testing. AQA teams can use Selenium to run comprehensive tests, check compatibility across browsers and devices, and implement change tracking in CI/CD pipelines.