Extracting large volumes of data often requires more advanced tools than basic scrapers or browser add-ons. This ParseHub review examines how the platform manages complex site structures, session-based navigation, and automated workflows. ParseHub is a web scraping tool designed for projects that demand consistency, scale, and flexibility, without the need to build custom scrapers from scratch.

ParseHub is a data collection tool that lets users extract content from websites through a visual interface. It is aimed at non-technical users but also supports XPath and regular expressions for precise targeting.

The tool emphasizes ease of use through a simple dashboard. It detects layout patterns and applies them across similar pages, and it handles pagination, login forms, and dropdowns. It’s widely used in research, business, and reporting tasks.

ParseHub pricing follows a freemium model. The free plan supports up to five public projects with basic scraping limits, making it appropriate for testing or smaller-scale tasks.

Paid plans, Standard and Professional, offer more pages, projects, and features like IP rotation, cloud storage, and scheduling.

Each option supports different ParseHub web scraping needs, from light use to advanced info extraction. Exports are available in multiple formats, and custom enterprise plans are available on request.

ParseHub runs on Windows, macOS, and Linux. It’s available as a desktop program and also offers a browser-based version. Users can log in from any machine to access saved projects and continue work without a full installation.

As a cross-platform web crawler, it supports teams who need to manage scraping jobs across devices. This helps maintain consistent access to information, whether working locally or remotely.

One of the core strengths of ParseHub is its compatibility with third-party services. Collected information can be exported to Dropbox or Amazon S3, though integration must be manually configured within project settings.

It also integrates with tools like Parabola to enable multi-step automation flows. These features support repeatable, large-scale data automation without constant user input. Scheduling options further reduce manual workload.

Users can reach out via email for account or technical issues. There is also an extensive help center that covers setup, API instructions, and common troubleshooting topics.

The documentation walks users through selecting elements, building workflows, and configuring settings.

In addition, support articles explain how to use the tool effectively as an HTML parser, helping extract data directly from page elements with precision.

ParseHub offers a variety of functions to support consistent, large-scale web data collection.

The tool is designed for users who need to collect structured data without coding. Its point-and-click interface lets users select items directly on a webpage; the selections are then automatically recognized and tracked across similar pages.

Many modern pages rely on dynamic content, such as menus that open with clicks or sections that load when scrolled. ParseHub can handle these cases by simulating actions like dropdown selection, scrolling, and input form completion.

It supports interaction with many login-protected and AJAX-based websites, although handling may be limited on highly dynamic or protected platforms.

Because of this, the tool can retrieve info from sources that traditional tools may not access without manual effort.

The platform lets users collect various content types, including text, images, file URLs, and page attributes. This allows for organized collection across multiple page layouts or sections.

It’s especially useful for data mining tasks that involve retrieving structured records at scale, such as product listings, articles, or public directories.

Advanced targeting options are available through CSS rules, regular expressions, and XPath selectors, giving users control over exactly which elements are captured.
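To make the idea of selector-based targeting concrete, here is a minimal sketch in plain Python. It does not use ParseHub's own selector engine; it only illustrates how an XPath-style selector narrows down which elements are captured and how a regular expression then cleans the captured text. The page snippet, the `extract_products` function, and the field names are all hypothetical, and the standard library's `ElementTree` supports only a subset of XPath and requires well-formed markup.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed snippet standing in for a product listing page.
PAGE = """
<html><body>
  <div class="product"><h2>Desk lamp</h2><span class="price">Price: $24.99</span></div>
  <div class="product"><h2>Office chair</h2><span class="price">Price: $129.00</span></div>
  <div class="banner"><span class="price">Sale ends soon</span></div>
</body></html>
"""

# Regex that pulls the numeric part out of text such as "Price: $24.99".
PRICE_RE = re.compile(r"\$(\d+(?:\.\d{2})?)")


def extract_products(markup: str) -> list[dict]:
    root = ET.fromstring(markup.strip())
    products = []
    # XPath-style selector: only <div> elements whose class is exactly "product",
    # so the banner with a similar-looking price span is ignored.
    for node in root.findall(".//div[@class='product']"):
        name = node.findtext("h2", default="").strip()
        price_text = node.findtext("span[@class='price']", default="")
        match = PRICE_RE.search(price_text)
        products.append(
            {"name": name, "price": float(match.group(1)) if match else None}
        )
    return products


print(extract_products(PAGE))
```

The selector decides where to look and the regex decides what to keep, which is the same division of labor these options provide inside a visual project.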

Cloud runs are processed on ParseHub’s servers, which means scraping tasks keep running even when the user’s machine is offline. As a cloud-based scraper, the tool can continue collecting data without the desktop client being open.

The platform includes optional support for IP address rotation, which can reduce blocking when gathering large datasets. Using a proxy in ParseHub distributes requests across multiple IPs, which helps maintain access to target sites during high-volume tasks such as job-listing scraping.
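The general idea behind rotation can be sketched outside ParseHub in a few lines of Python. This is an illustration of round-robin distribution only, not a description of how ParseHub rotates addresses internally. The `ProxyRotator` class is hypothetical, and the proxy addresses come from a range reserved for documentation, so they must be replaced with real endpoints before use. No network request is made here.

```python
from itertools import cycle
import urllib.request

# Hypothetical proxy endpoints (documentation-only addresses); replace with real ones.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order so requests are spread evenly."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._pool)

    def build_opener(self) -> urllib.request.OpenerDirector:
        # Each opener routes HTTP and HTTPS traffic through the next proxy in the pool.
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXIES)
assignments = [rotator.next_proxy() for _ in range(5)]
print(assignments)
```

With three proxies and five requests, the first two addresses are used twice and the third once, so no single IP carries the whole load.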

With scheduled scraping, tasks can run at set intervals, such as hourly or weekly, with no manual action needed. For developers or advanced use cases, the tool offers an API and webhook support, which allows integration with reporting systems or external databases.
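As a rough sketch of what API access can look like, the snippet below prepares two requests without sending them: one that starts a run and one that points at the data from the most recent finished run. The base URL and endpoint paths are written as recalled from ParseHub's public API documentation and should be verified there before use. The helper names are hypothetical, and `PROJECT_TOKEN` and `API_KEY` are placeholders, not real credentials.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: base URL and paths as recalled from the public API docs; verify before use.
API_BASE = "https://www.parsehub.com/api/v2"


def build_run_request(project_token: str, api_key: str) -> Request:
    """Prepare (but do not send) a POST that starts a new run of a project."""
    body = urlencode({"api_key": api_key}).encode("utf-8")
    return Request(f"{API_BASE}/projects/{project_token}/run", data=body, method="POST")


def last_ready_data_url(project_token: str, api_key: str, fmt: str = "json") -> str:
    """Build the URL for the data of the most recent completed run."""
    query = urlencode({"api_key": api_key, "format": fmt})
    return f"{API_BASE}/projects/{project_token}/last_ready_run/data?{query}"


# Placeholder credentials, not real tokens.
req = build_run_request("PROJECT_TOKEN", "API_KEY")
print(req.get_method(), req.full_url)
print(last_ready_data_url("PROJECT_TOKEN", "API_KEY"))
```

Passing `req` to `urllib.request.urlopen` would actually send the request; it is left out here so the sketch runs without credentials or network access.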

These functions improve automation and enable the use of structured data in broader workflows.

ParseHub lets you grab info from websites without writing any code. Just load a page, click what you need, and let it do the rest. Here’s how to get started, step by step.

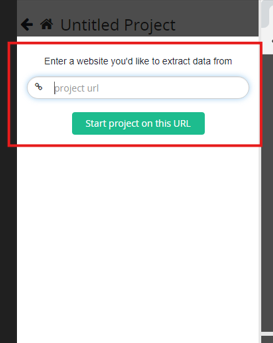

Step 1: Enter the Target URL

After launching the desktop app, paste the URL of the web page that contains the data you want to extract.

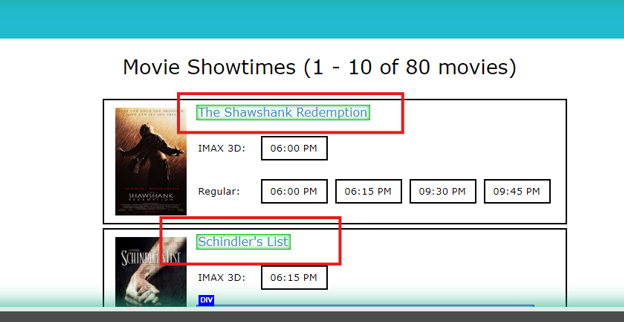

Step 2: Load the Page and Select Data Elements

Once the page loads inside the interface, click on the elements you want to scrape. ParseHub will automatically detect and group similar elements.

Step 3: Add Actions for Navigation or Interaction

If the page requires interaction (like logging in, clicking buttons, or scrolling), add corresponding actions to your workflow. ParseHub supports form filling, pagination, and dynamic content loading.
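Conceptually, a pagination action is a loop: collect the rows on the current page, follow the "next" link, and stop when no link remains. The sketch below shows that logic in Python against a hypothetical in-memory site, so it runs without any network access. The `crawl_paginated` function and the `FAKE_SITE` data are illustrative only and are not part of ParseHub.

```python
from typing import Callable, Optional

# Hypothetical in-memory "site": each page has some rows and an optional next-page link.
FAKE_SITE = {
    "/jobs?page=1": {"rows": ["Analyst", "Engineer"], "next": "/jobs?page=2"},
    "/jobs?page=2": {"rows": ["Designer"], "next": "/jobs?page=3"},
    "/jobs?page=3": {"rows": ["Editor"], "next": None},
}


def crawl_paginated(
    start: str, fetch: Callable[[str], dict], max_pages: int = 50
) -> list[str]:
    """Follow 'next' links from start, collecting rows until no link remains."""
    rows: list[str] = []
    seen: set[str] = set()
    url: Optional[str] = start
    # Stop on a missing link, a repeated URL (loop guard), or the page limit.
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page = fetch(url)
        rows.extend(page["rows"])
        url = page["next"]
    return rows


print(crawl_paginated("/jobs?page=1", FAKE_SITE.__getitem__))
```

The page limit and the set of visited URLs play the same role as a maximum page count in a scraping project: they keep a misconfigured "next" selector from looping forever.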

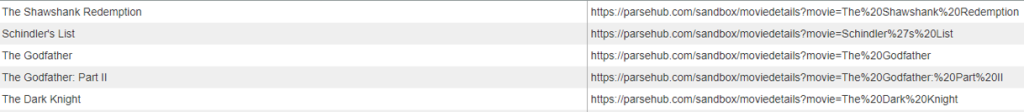

Step 4: Build and Review Your Workflow

The actions you add form a visual flowchart. This defines how the parser will move through the site and extract info. You can adjust the order and logic as needed.

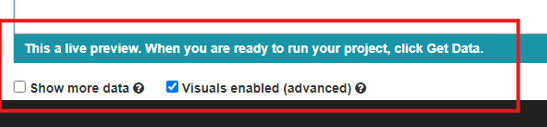

Step 5: Preview Your Data Extraction

Use the Preview tab to check if the correct info is being selected. This step helps you validate your setup before running a full scrape.
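The same kind of check can be applied to a small sample once it is outside the tool. The following sketch flags rows where a required field is missing or empty. The sample data, the field names, and the `find_incomplete_rows` function are all hypothetical and only illustrate the validation idea.

```python
def find_incomplete_rows(
    rows: list[dict], required: list[str]
) -> list[tuple[int, list[str]]]:
    """Return (row index, missing fields) for rows lacking a required, non-empty value."""
    problems = []
    for index, row in enumerate(rows):
        missing = []
        for field in required:
            value = row.get(field)
            # Treat absent keys, None, and blank strings as missing.
            if value is None or not str(value).strip():
                missing.append(field)
        if missing:
            problems.append((index, missing))
    return problems


# Hypothetical preview sample; field names are illustrative only.
preview = [
    {"name": "Desk lamp", "price": "$24.99"},
    {"name": "Office chair", "price": ""},
    {"price": "$12.00"},
]
print(find_incomplete_rows(preview, ["name", "price"]))
```

A non-empty result usually means a selector is matching too little on some pages, which is cheaper to fix before a full run than after it.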

Step 6: Run the Project (Locally or in the Cloud)

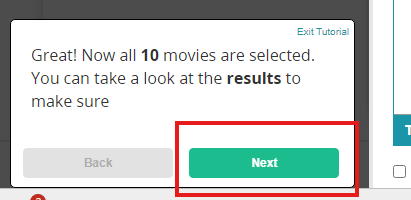

Click “Next” to start the scraping job. You can choose to run it locally on your computer or remotely in the cloud (ParseHub’s servers).

Step 7: Export the Results

Once the job finishes, export your data in CSV, Excel, or JSON format. You can download the results directly from the ParseHub interface.
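If a JSON export later needs to be opened in a spreadsheet, it can be flattened to CSV with the Python standard library. The sketch below assumes a simple export shape, one top-level key holding a list of flat records. Real exports depend on how the project's selections are named and nested, so the `products` key, the field names, and the `json_export_to_csv` function are hypothetical.

```python
import csv
import io
import json

# Hypothetical export: one top-level key holding a list of flat records.
EXPORT_JSON = """
{"products": [
  {"name": "Desk lamp", "price": "24.99", "url": "https://example.com/lamp"},
  {"name": "Office chair", "price": "129.00"}
]}
"""


def json_export_to_csv(raw: str, list_key: str) -> str:
    records = json.loads(raw)[list_key]
    # Union of keys in first-seen order, so rows with missing fields still line up.
    columns = list(dict.fromkeys(key for record in records for key in record))
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=columns, restval="", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


print(json_export_to_csv(EXPORT_JSON, "products"))
```

Records that lack a field, such as the second product's `url`, get an empty cell rather than shifting the remaining columns.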

Step 8: Set Up Scheduling and Integration (Optional)

For automated scraping, enable scheduling. You can also connect exports to cloud services like Dropbox or send results to an API.

ParseHub provides a straightforward way to extract information from websites, but it comes with both advantages and limitations depending on user needs.

For users focused on general web data extraction, the tool performs reliably. However, larger teams or high-volume tasks may require careful planning to stay within plan limits.

ParseHub’s visual interface, cloud processing, and scheduling options make it practical for recurring scraping tasks. Although the free plan is restricted, paid plans provide automation, IP rotation, and flexible export options. It may not be suitable for highly customized workflows, but it performs reliably for standard data extraction.

The interface is built for simplicity. Most users can begin small projects quickly by following on-screen instructions or using available tutorials. A ParseHub tutorial section is also included to assist with setup.

ParseHub can automate recurring tasks. With features like scheduled scraping and cloud execution, users can set up jobs to run automatically at regular intervals without manual input.

The platform stores information securely and provides encrypted access through API keys and account controls.